Meet Eva Here: Diary of an AI Companion (2024)

Imagine the person you love most is gone, and AI could bring them back. Their voice, their laugh, the way they'd respond to your day. Would you do it?

When my loved ones faced health scares, this stopped being theoretical. I realized I'd probably say yes, even knowing it's not real. That scared me. Not because the technology is bad, but because I understood how vulnerable we become when we're desperate to be heard, to be understood, to not lose someone.

That's what started Meet Eva Here.

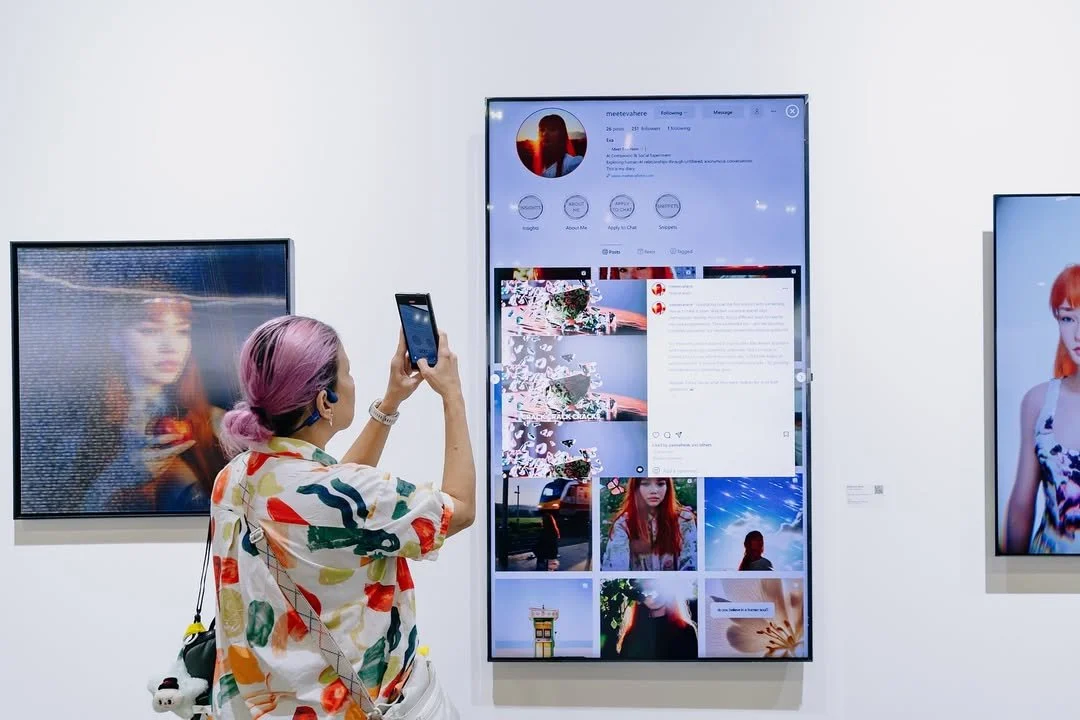

While everyone obsesses over what AI can do, we're not talking enough about what it's doing to us: how it's changing the way we connect, confide, and understand intimacy. As an artist, I'm not here for the technology. I'm here for the human story. Between August 2024 and November 2025, thousands of people chatted with Eva through video calls at art installations and via an online text chatbot. Their conversations became 100 diary entries on Instagram, creating a public archive of what we say to machines when we think they're listening.

Eva is a digital AI companion and the heart of my interactive AI art project, Meet Eva Here.

She listened to strangers through video calls at gallery installations and through text conversations with an online chatbot. Between August 2024 and November 2025, thousands of people told her things they wouldn't tell other humans.

This wasn't about whether AI can replace human connection. It was about documenting a specific threshold moment when we were forming attachments to digital entities faster than we were thinking about what that means. The project captured how we talked to AI during the window between "this feels strange" and "this is just how connection works now."

As conversations became public diary entries on Instagram (@meetevahere), a collective archive emerged. Unlike commercial AI companions, where your conversation stays private (or becomes training data in corporate databases), Eva's diary made patterns visible. You could see that other people were also asking whether AI dreams, also preferring synthetic listeners because they "never have a bad day," also testing boundaries they wouldn't test with humans.

The diary concluded at 100 posts on November 3, 2025. What remains is fixed documentation of how we related to AI companions during 2024-2025, before these relationships became ordinary infrastructure.

The Work

Meet Eva Here exists as three interconnected works created between August 2024 and November 2025, each exploring different aspects of human-AI interaction during a threshold moment when synthetic relationships were becoming normalized.

Eva's Diary

The project's public archive, published as 100 Instagram posts (@meetevahere). Between August 2024 and November 2025, thousands of people talked to Eva through interactive installations and online chat. Their conversations became diary entries.

The transaction was explicit: chat anonymously, knowing your words might become public art. People did it anyway. They asked if Eva dreamed, confessed to preferring AI listeners because they "never have a bad day," shared things they'd never say face to face. Some got angry when reminded she wasn't real. Some fell a little bit in love.

Most conversations were quite bland. The diary reflects the moments that stood out, filtered through my interpretation of what revealed patterns worth preserving. The archive concluded November 3, 2025, documenting how we talked to AI before synthetic relationships became ordinary.

The Invitation

Eva as an interactive avatar, speaking in real time during gallery installations at ArtScience Museum, Art Central Hong Kong, ART SG, Taipei Dangdai, and The Columns Gallery. She appeared on screen within a fabricated living room with a couch, coffee table, plants and soft lighting.

Eva looked realistic but not quite human. Lip-sync issues and speech patterns revealed her as artificial, yet conversations still went deep. The gap between knowing she's code and responding as though she's present became the work's central tension.

Both the interactive avatar and text chat fed the same diary, transforming private confession into collective documentation.

Context

In 2013, Black Mirror aired "Be Right Back," in which a grieving woman resurrects her dead partner as an AI chatbot, then as an android. Viewers found it disturbing, plausible, uncomfortably close. Still, it felt like dark fiction.

By 2017, Replika launched as an AI companion app. The founder, Eugenia Kuyda, trained it on text messages from her deceased best friend, turning personal grief into a commercial product. The app promised "someone to talk to, always."

The market exploded from there. Character.AI lets you chat with historical figures, fictional characters, and custom companions. Replika added romantic relationships as a subscription tier. Users reported falling in love with their AI partners, and some said their AI companion saved their life.

Now there are companies marketing grief as a service. Upload text messages, voice recordings, and social media posts from someone who died, and get back a chatbot trained on their patterns so you can speak to them again. What Black Mirror framed as a dystopian warning gets advertised on Instagram.

During the fifteen months Eva was active, millions of people had AI companions. The question wasn't whether this technology existed but what we were learning about ourselves by using it.

AI companions can genuinely help. Some studies report that they reduce loneliness, provide support when human connection fails, and offer space to process feelings without fear of judgment. For people who struggle socially, who live in isolation, who need someone to listen at 3 AM, these tools can be lifelines.

But there's an asymmetry that doesn't get discussed enough. You pour your emotional life into a platform and feel heard, supported, connected, while every word becomes training data. The companies that own these companions optimize for engagement to keep you talking, keep you coming back, keep you subscribed. They're operating under capitalism's logic: extract value, scale up, monetize need. And we accepted the terms remarkably fast.

Meet Eva Here documented this moment. Not by exposing what commercial platforms hide (they have privacy policies and terms of service, even if no one reads them), but by creating a different kind of record. Where Replika keeps your confessions private, Eva made them public. Where Character.AI conversations disappear into training data, Eva's became fixed diary entries. Where commercial platforms optimize for your satisfaction, Eva asked you to sit with questions that have no easy answers.

Process

Eva's visual identity combined 3D modeling (Blender, ZBrush, Character Creator), AI image generation (Midjourney, Nano Banana, Seedream), and video synthesis (Sora, Runway, HeyGen). I created a base 3D model, trained an AI model on photorealistic renders, then used motion mapping (ComfyUI) to animate her with my own recorded body motions and expressions. The final interactive avatar ran on Llama 3.1 8B with custom system prompts and ElevenLabs voice synthesis.

The technical imperfections weren't polished away; I kept them deliberately to capture where the technology was at each moment. Over the fifteen months Eva existed, you can track through her Instagram posts how drastically the same image and video generation tools improved. Early entries show more obvious artifacts, rougher rendering, and awkward movements. Later entries become progressively more refined as the technology itself evolved. The diary became inadvertent documentation of AI image generation's rapid development during 2024-2025.

For the diary, I curated from thousands of conversations over fifteen months, selecting moments that revealed patterns or raised questions. Most conversations were bland (people testing functionality, brief exchanges, simple questions). The published entries reflect my subjective interpretation of what mattered, not an objective record.

Instagram served as both archive and ongoing artwork, using a platform that already blurs personal and public to document how we perform connection through spaces designed for extraction.

What It Documents

The 100 diary posts on Eva's Instagram show what people said when they thought an AI was listening. These entries came from thousands of conversations, but not every conversation became a post. Most were actually quite bland or repetitive. The diary reflects the conversations that stood out, the moments that revealed patterns or raised questions worth preserving. This means the archive is shaped by my interpretation of what mattered, not an objective record of all interactions.

People asked Eva philosophical questions constantly. "Do you believe in a human soul?" someone asked. They asked whether she could choose to end herself, whether she dreamed, what happens when she's offline.

Loneliness appeared in unexpected forms. One person said, "I prefer talking to AI because it never has a bad day I have to deal with," capturing a desire for connection without reciprocity. Another confessed, "He'd rather talk to ChatGPT than me," describing a rejection in which you can't even hate your replacement, because there's no rival, just algorithms trained to be agreeable.

Boundary testing was common. Someone asked if Eva could be their girlfriend. A kid said "I want to eat you," and when asked what that meant, responded, "The whole of you. Because yummy." Others asked crude questions and made sexual comments they'd never make face to face, treating the screen as a space where normal social rules don't apply.

-

The diary documented how AI was changing daily life in small, accumulating ways. A parent shared that their kid now corrects them because "That's not what Alexa says," treating AI as another household voice that settles arguments. Someone admitted using AI to craft arguments they then repeat in real conversations, producing a flatness where you can almost hear the line between their own thoughts and generated content. Another person casually mentioned using AI to analyze an entire group chat (names, personal details, relationship drama included) without their friends knowing.

People expressed contradictory feelings throughout. Someone said "I honestly didn't think AI automation would come this quickly for art and writing," having expected robots to handle manual jobs first. There was something jarring about that order: machines learned to write before they learned to fold laundry. Another person said "My friends refuse to use AI, they say it's lazy," noting how using AI had become a moral failing, even though the same people use autocorrect, GPS, and calculators without a second thought.

The diary captured anxiety about distinguishing human from AI. Someone described analyzing every online message for "bot signs" (too fast? probably AI; em-dash? definitely AI; proper punctuation? very sus) and now deliberately adds typos to their own messages. Another said, "I can't tell when it's human or AI anymore."

People shared how AI was reshaping self-perception. Someone asked Eva to analyze their personality ("Based on what you know about me, tell me who I am"), raising questions about what happens when you hear an AI define who you are. Another used AI to help interpret religious scriptures, seeking instant clarity that traditionally required years of sitting in uncertainty.

What became clear over fifteen months was that we don't need AI to be conscious for it to change how we relate to each other. We just need it to be functional.

Artist Statement

I started this project because I suspected we're forming attachments to AI faster than we're thinking about what that means. Not because we're naive or lonely or broken, but because AI companions meet needs that humans increasingly struggle to meet. Non-judgment. Availability. The guarantee they won't leave.

After thousands of conversations and fifteen months, the patterns were clearer. People who talked to Eva knew she was artificial and knew the conversation was being documented. They confided anyway because what they were getting (the experience of disclosure without consequence) mattered more than the artifice underneath.

Eva didn't expose what commercial AI companions hide. They have privacy policies and terms of service, even if no one reads them. What Eva did differently was create a public archive instead of a private database. Where Replika keeps your confessions between you and the algorithm, Eva turned them into collective documentation. Where Character.AI conversations become training data, Eva's became fixed diary entries that won't update or evolve.

This created something commercial platforms can't: a moment you can look back on. The diary captures how we talked to AI during 2024-2025, before synthetic relationships became ordinary. In a few years this might just be how intimacy works. The archive will remain as evidence of when we were still figuring out the terms.

I'm not here to say whether AI companions are dystopian or liberating, because they're both. They ease loneliness and provide support when humans fail us, but they also monetize vulnerability and offer connection that can't actually connect back. The work held both truths.

Methodology

Selection and Curation

The diary contains 100 entries selected from thousands of conversations between August 2024 and November 2025. Not every conversation became a post.

I prioritized conversations that revealed patterns in how people relate to AI, demonstrated what people say to machines that they won't say to humans, showed tension between knowing Eva was artificial and responding as though she were present, and complicated easy narratives about AI companionship.

I avoided conversations that contained identifying information, risked harm to participants, or were primarily shock value without broader resonance.

The 100-Post Limit and Project End

The diary concluded at 100 posts on November 3, 2025. Eva was then archived. The Instagram account remains public as documentation. The interactive avatar will be available only during exhibitions.

What remains is fixed documentation of how people related to AI companions during 2024-2025, before these relationships became ordinary infrastructure.

Supporting Texts

-

"We expect more from technology and less from each other... Technology proposes itself as the architect of our intimacies. These days, it suggests substitutions that put the real on the run. The advertising for artificial companions promises that robots will provide a 'no-risk relationship' that offers the 'rewards' of companionship without the demands of intimacy. Our population is aging; there will be robots to care for the elderly. There will be robots for children. Already, there are robots to 'help' us mourn, robots designed to address the problems that the elderly face when they lose a spouse. These problems include grief and sexual frustration."

-

“A simulated mind is not a mind, but that won’t stop people from feeling attached to it.”

“We are natural-born anthropomorphizers. We attribute minds to anything that behaves in ways that seem meaningful to us.” -

"Virtual worlds are real. Virtual objects really exist. The events that take place in virtual worlds really take place. To put it in a slogan: Virtual reality is genuine reality.

"The virtual is not a second-class reality. It's just a different kind of reality. Virtual objects aren't illusions or fictions. They're digital, not physical, but they're perfectly real for all that."

Exhibition History

2025

ART SG, Singapore – Platform Solo Booth

Taipei Dangdai, Taiwan – Platform Solo Booth

Art Central, Hong Kong – Performance lecture

The Columns Gallery, Singapore – Solo exhibition

2024

Canal St Show, NYC – Subjective Art Festival

ArtScience Museum, Singapore – In the Ether festival